Big Data Lean Architecture

Big data has helped us solve some very sophisticated engineering problems. But as anyone who works with complex data knows, it also creates architectural challenges. At PlaceIQ, it’s critical that we properly orchestrate the available big data technologies in order to maintain and optimize the high-scale dataset we manage.

These architectural challenges are complex, sparsely documented, and often proprietary. Our team works with resource-intensive location data while constantly building new products that are customized by clients. We have been working to solve these complexities for 10 years and have created an architecture that is large-scale, stable, and economical, which lets us stay flexible for our clients and for the changes ahead in our industry. This post summarizes our architectural decisions.

Edge Complexity

Big data carries a lot of inherent complexity. Today there are many great technologies, many of them open source, and each is quite complex on its own. A lot of additional complexity is introduced at the edges, in getting the different technologies to talk to each other.

Example Edge Complexity

Common issue: You have written an application, and to make it reliable and cross-platform you put it in Docker. You use Terraform to spin up a virtual machine hosting Docker. Maybe you use Kubernetes to make the different Docker containers talk with each other. If you are running in a public cloud, you also have to put a lot of effort into setting up private subnetworks.

Fighting Complexity Within

PlaceIQ has decided that fighting this complexity is at the core of maintainable and reliable software. We have always used best-of-breed technologies, e.g. Scalding, Hive and Pig. But when these were eclipsed by Spark, we devoted engineering resources to rewriting our earlier Scalding, Hive and Pig code in Spark. We used Cassandra, the NoSQL database, but when it was no longer essential, we took it out.

Product Information

PlaceIQ Movement

Develop smarter marketing strategies and drive real-world consumer actions by using PlaceIQ’s proprietary visitation data to analyze consumer movement, on your own terms.

Main Tech Stack

Our current tech stack is quite minimal. It is optimized for reliability, high performance, and ease of configuration for clients.

- Spark with DataFrames written in Scala is the main workhorse

- Azkaban with a little Python for workflows

- Java with Spring Boot for REST services

- Postgres for internal database

- Snowflake as a way to share data with clients

- Elasticsearch to do real-time queries on large datasets

- Private datacenter running Hadoop YARN

- S3, Azure, and GCP storage for delivery to clients

Below, I’ll share a brief elaboration on some of our choices.

Spark with DataFrames

Spark is dominating data engineering. The alternative technologies are Scalding, Hive and Pig.

Spark and Scalding both started as Scala libraries that turn convoluted Hadoop Java code into clean functional programming. Hive and Pig gave Hadoop a declarative interface, SQL-like in the case of Hive.

What really made Spark stand out was the addition of DataFrames that you can query with SQL. With that, Spark combined two very powerful programming paradigms, functional programming and declarative programming, and with them the advantages of Scalding, Hive and Pig.

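    import org.apache.spark.sql.functions._
    import spark.implicits._ // assumes a SparkSession named `spark` is in scope

    // Wrap the external geo library call in a UDF so it can be applied to a column
    val centroid = udf { geometry: String =>
      GeoService.roundedPolygonCentroid(geometry)
    }

    // Keep devices with more than one scored cluster, one row per cluster
    df.where(size($"ccs_scored") > 1)
      .select(
        $"device_key",
        explode($"ccs_scored") as "cluster"
      )
      .filter($"cluster.scores"("homeDwellNumDays") > numDaysThresh)
      .select($"device_key", $"cluster.features_scored"(0)
        .getField("feature_id") as "feature_id")
      // Join against the basemap to look up each feature's geometry
      .join(bm, $"id" === $"feature_id")
      .select($"device_key", centroid($"geometry") as "centroid")
      // Collect all dwell centroids per device into a typed Dataset
      .groupBy("device_key")
      .agg(collect_list($"centroid") as "dwells")
      .as[DeviceDwells]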

The snippet above is a complex operation: it performs a SQL-style join, calls an external geo library, and works with complex data types. Spark SQL lets you do this without any ceremony.

We run all production code as Scala Spark, and we only release code after it passes a unit test suite that takes about 15 minutes to run.

Workflow: Azkaban vs. Airflow

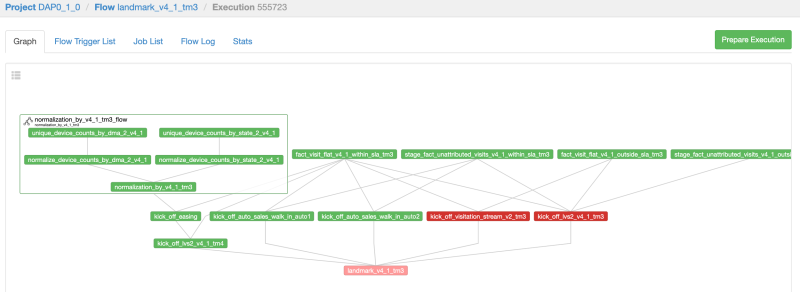

We chose Azkaban, the open source workflow manager originally developed at LinkedIn, as our web-based workflow tool, where we schedule and run all our flows. Azkaban lets us rerun flows with the same parameters.

The main alternative is Apache Airflow, but Azkaban is substantially simpler.

In both Azkaban and Airflow you define workflows as DAGs, directed acyclic graphs, of jobs that you want to run.

In Airflow you define your DAG as Python code, so your code is closely coupled to the Airflow library. That library is frequently updated, and because the workflow definition is itself running code, migrating to a new version of Airflow is hard. You usually run Airflow in Docker Compose or Kubernetes to have enough compute power to run the Python scripts.

In Azkaban you just upload an azkaban.zip file with a simple definition of the graph. Each job is just a link to a parent job and a bash command. Azkaban is stable and easy to maintain. It is very limited in what it can do, but that suits us because we decided to consolidate our logic in Scala Spark.
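To make the "link to a parent job plus a command" idea concrete, here is a minimal sketch in plain Scala of a workflow as a DAG and of how a scheduler could derive a valid run order from it. This is an illustration only: it is not Azkaban's file format or API, and the names `Job`, `WorkflowDag`, `executionOrder`, and the example jobs are all hypothetical.

```scala
// Hypothetical model: a job is a shell command plus the names of its parent jobs.
// This is NOT Azkaban's file format, only an illustration of the DAG idea.
final case class Job(name: String, command: String, dependsOn: Set[String] = Set.empty)

object WorkflowDag {
  /** Returns job names ordered so that every job comes after its parents,
    * or a Left describing an unknown parent or a cycle. */
  def executionOrder(jobs: Seq[Job]): Either[String, List[String]] = {
    val known = jobs.map(_.name).toSet
    val missing = for {
      j <- jobs
      d <- j.dependsOn.toSeq.sorted if !known.contains(d)
    } yield s"${j.name} -> $d"

    // `done` holds finished job names, most recent first.
    @annotation.tailrec
    def loop(remaining: List[Job], done: List[String]): Either[String, List[String]] =
      if (remaining.isEmpty) Right(done.reverse)
      else {
        // A job is ready once all of its parents have finished.
        val (ready, blocked) = remaining.partition(_.dependsOn.forall(done.contains))
        if (ready.isEmpty)
          Left("cycle detected among: " + blocked.map(_.name).mkString(", "))
        else
          loop(blocked, ready.map(_.name).reverse ::: done)
      }

    if (missing.nonEmpty) Left("unknown dependencies: " + missing.mkString(", "))
    else loop(jobs.toList, Nil)
  }
}

object WorkflowDagExample {
  // Hypothetical three-step flow, deliberately declared out of order.
  val flow: Seq[Job] = Seq(
    Job("deliver", "echo deliver", Set("score")),
    Job("ingest", "echo ingest"),
    Job("score", "echo score", Set("ingest"))
  )

  def main(args: Array[String]): Unit =
    println(WorkflowDag.executionOrder(flow)) // Right(List(ingest, score, deliver))
}
```

Because each job carries nothing but a command and its parents, the workflow definition stays pure data; all real logic lives in whatever the command launches, which in our case is a Scala Spark job.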

MVC Architecture

Most GUI programming is based on the design pattern called MVC, Model View Controller. We found that borrowing this extra structure for our data flows makes our code more organized and maintainable. Here is an example of what our MVC code looks like.

    def locationTaxonomyRefresh(placeTaxDate: ZonedDateTime,
                                runDate: ZonedDateTime)
                               (implicit env: Env): Unit = {
      implicit val spark: SparkSession = env.spark
      val runDateStamp = DateStamp(runDate)
      for {
        locations     <- valueStep(env.dao.landmarkV4_1.dimLocationIntermediate.load(runDateStamp))
        placeTaxonomy <- valueStep(env.dao.basemap2_1.placeTaxonomy.load(DateStamp(placeTaxDate)))
        locTaxonomies <- PlaceTaxonomyTransformationStep(placeTaxonomy, locations, runDate)(spark)
      }
        env.dao.landmarkV4_1.dimLocationTaxonomy.store(locTaxonomies, runDateStamp)
    }

A big advantage of MVC is that it lets us write unit tests for the logic part of our flow. This is essential for maintaining high quality while having a quick release cycle.
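The testability point can be illustrated with a small sketch in plain Scala, with in-memory collections standing in for Spark DataFrames. Everything here is hypothetical (`Dao`, `TaxonomyLogic`, `TaxonomyFlow`, `InMemoryDao`, and the case classes are not PlaceIQ's actual types), and the mapping of pieces to MVC roles is just one possible reading of the pattern.

```scala
// Hypothetical types; in-memory Seqs stand in for Spark DataFrames.
final case class Location(id: String, placeId: String)
final case class PlaceTaxonomy(placeId: String, category: String)
final case class LocationTaxonomy(locationId: String, category: String)

// Data access: the only code that knows where the data lives.
trait Dao {
  def loadLocations(): Seq[Location]
  def loadPlaceTaxonomy(): Seq[PlaceTaxonomy]
  def storeLocationTaxonomy(rows: Seq[LocationTaxonomy]): Unit
}

// Logic: a pure function with no I/O, so a unit test needs no cluster or files.
object TaxonomyLogic {
  def join(locations: Seq[Location], taxonomy: Seq[PlaceTaxonomy]): Seq[LocationTaxonomy] = {
    val categoryByPlace = taxonomy.map(t => t.placeId -> t.category).toMap
    // Locations without a known category are dropped (inner-join semantics).
    locations.flatMap(l => categoryByPlace.get(l.placeId).map(c => LocationTaxonomy(l.id, c)))
  }
}

// Flow: wires load, logic, and store together and contains no logic of its own.
object TaxonomyFlow {
  def refresh(dao: Dao): Unit =
    dao.storeLocationTaxonomy(TaxonomyLogic.join(dao.loadLocations(), dao.loadPlaceTaxonomy()))
}

// Test double: serves fixed inputs and records what the flow stored.
final class InMemoryDao(locations: Seq[Location], taxonomy: Seq[PlaceTaxonomy]) extends Dao {
  var stored: Seq[LocationTaxonomy] = Seq.empty
  def loadLocations(): Seq[Location] = locations
  def loadPlaceTaxonomy(): Seq[PlaceTaxonomy] = taxonomy
  def storeLocationTaxonomy(rows: Seq[LocationTaxonomy]): Unit = stored = rows
}
```

With this split, a unit test can call `TaxonomyLogic.join` directly on a handful of rows, or run `TaxonomyFlow.refresh` against an `InMemoryDao` and inspect `stored`, without touching production storage.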

In conclusion, maintaining a lean and efficient architecture is critical to serving our clients and ensuring our teams are equipped to push big data to new applications and use cases. Is your tech stack set up to handle ingestion of location data? Ask us about it!
